ABSTRACT

The safe operation of dams is ensured by monitoring systems that collect periodic information on environmental conditions (for example, temperature and water level) and on the structural response to external actions. In newly built or retrofitted facilities, large networks of sensors can take daily measurements that are automatically transferred to servers. In other cases, additional information can be acquired, occasionally or systematically, through emerging drone-based non-contact full-field techniques.

The measurements are processed by various analytical and machine learning tools trained on historical data sets, capable of highlighting any anomalous recordings. Monitoring data can also support the accurate calibration of a physics-based model of the structure, usually built in the finite element framework. The analyses carried out by the resulting digital twin allow the experimental database to be expanded with the displacements evaluated for extreme environmental conditions, damage or collapse mechanisms that have never occurred before.

This contribution illustrates an integrated approach to the safety assessment of existing dams that combines experimental, computational and data processing methodologies. Attention is particularly focused on model calibration procedures and on the uncertainties that influence the characteristics of the joints. The presented results of the validation studies performed by the Authors on benchmark and real-scale problems highlight the merits and limitations of alternative approaches to data exploitation and remote measurement.

1. Introduction

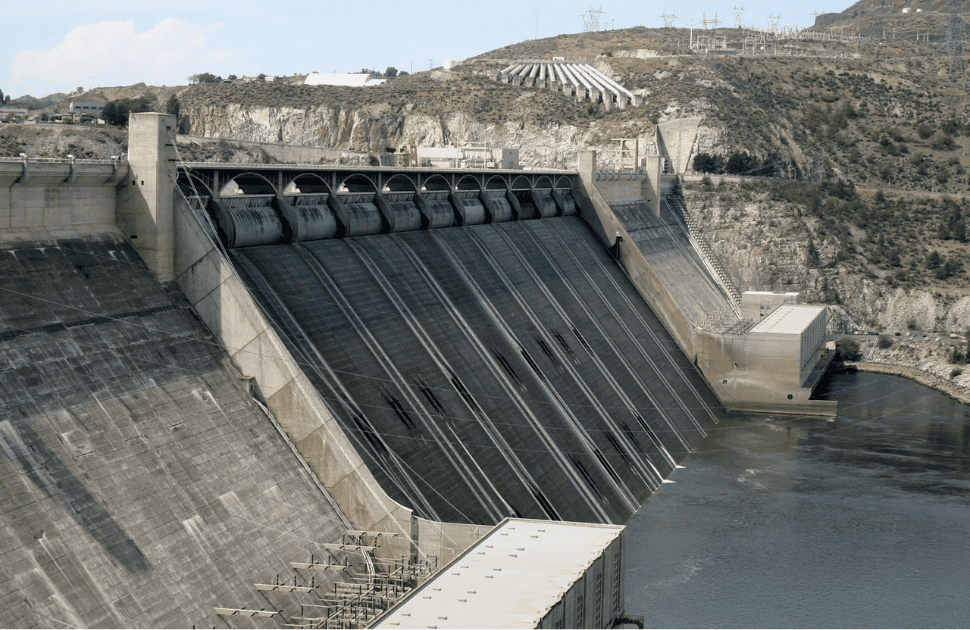

Dams constitute an important asset for many countries. Concrete dams located in the Alpine region, built until the 1970s, provide clean energy and supply drinking and irrigation water. They also contribute to controlling floods that are expected to increase in frequency and intensity due to climate change. In addition, hydropower plants contribute to providing balancing services that stabilize the electricity grid, fostering the exploitation of non-programmable renewable energy sources (like solar and wind) to achieve the European decarbonization targets. Therefore, despite their aging, existing dams are becoming increasingly important as they comply with the energy and environmental policies implemented in recent years to address the present concern about climate change.

Dam monitoring systems obtain information on the environmental conditions (air and water temperatures, water level in the reservoir, precipitation) and the deformation of the dam as loads change [1–4]. The number and location of the measurements and the recording frequency vary depending on the facility. The collected data are transmitted, stored, and then processed by various analysis tools.

Traditionally, in this context, monitoring data are managed by the so-called statistical, or Hydrostatic-Season-Time (HST), model developed by EDF (Électricité de France) in the late 1950s and subsequently improved to reliably support structural health evaluations [5–10]. The HST approach approximates the structural response by a combination of pre-defined functions that consider the effects of water level, temperature and aging separately. Parameter values are calibrated for each dam based on available measurements.

Monitoring data can be interpreted alternatively by Machine Learning (ML) approaches based on more flexible algorithms [11,12]. Neural Networks (NN) [13–16], Extreme Learning Machines (ELM) [17], Support Vector Machines [18–20], Gaussian Process Regression [21], hybrid Deep Learning models [22] and Boosted Regression Trees (BRT) [23–25] have proven to be effective prediction tools for dam monitoring when properly optimized and validated. Comparison studies have also demonstrated that no ML approach outperforms the others in all situations [26].

However, accurate predictions alone do not constitute a complete surveillance system. Establishing the limits within which measured observations are considered reliable is essential to detect anomalous behavior. Currently, thresholds are mainly defined by multiplying the standard deviation of the prediction residuals by a constant factor. In this context, Salazar et al. [27] proposed updating the limits each year through a weighted average of the values obtained for previous years. This analytical approach allows the identification of individual outliers but neglects the multidimensional context. To overcome this limitation, Mata et al. [28] have recently introduced a methodology that complements the traditional approach by considering moving averages and moving standard deviations of the residuals. In addition, they proposed a second approach that operates in a multidimensional framework and employs a clustering technique to identify anomalies [29]. Alternatively, Chen et al. [30] formulated a multi-target forecasting method that exploits the internal relationships between target variables.

The source of any anomalous reading should also be identified. Outliers may be associated with minor events (for example, the malfunction or need for recalibration of some instruments) or load combinations that have never occurred before and, therefore, are not included in model training. Anomalies could otherwise indicate ongoing degradation processes [9,31–33]. ML tools alone cannot solve these problems, especially since measurements in damaged conditions are generally not available. Dam failures are rare, and information concerning any past event is difficult to transfer from one situation to another. Unlike other infrastructures [34,35], practically all dams represent single prototypes due to their peculiar geometry, geomorphological and ambient conditions.

This issue can be addressed by constructing prediction models of the structural response to simulate the most likely critical scenarios [36,37]. Finite Element (FE) approaches are mainly used for this purpose, due to their generality and flexibility [38–42]. Potentially, the models can also account for uncertainties by introducing probabilistic distributions of the material properties and environmental conditions. However, stochastic methodologies have been mainly applied to simplified models due to their high computational costs, only partly mitigated by the use of analytical surrogates trained on the results of a limited number of finite element simulations [37,43–45]. Reduced models are also used for sensitivity studies and parameter identification procedures [46–55].

The presence of artificial (construction and contraction) and natural (i.e., cracks) joints constitutes the main source of non-linearity and uncertainty in dams in operation [9,42,56–63]. Joints represent surfaces of potential displacement discontinuity, often introduced on purpose to limit the stresses produced by thermal action and other expansion mechanisms [31–33,42,57], and by the interaction with the foundation [62].

Calibrating the mechanical characteristics (friction, roughness, cohesion, fracture energy) of dam joints can be challenging. The most direct methodology relies on core samples extracted across the interfaces of interest and subjected to large-scale tests under cyclic loading. In fact, it is necessary to take into account both the characteristic dimension of the inherent heterogeneity of the dam concrete and contact surfaces, and their variability over time due to the external (ambient) action [61–65].

Non-destructive approaches have been proposed to detect cracks and determine interface parameters using the information collected on site during operation [66–70]. Generally, strain gauges monitor joint opening and sliding, while other equipment (such as sonic and ultrasonic tomography and georadar) is used for surveys, mapping and control of the propagation of existing cracks. However, existing dams are not always equipped with sensors that provide detailed (in space and time) information about the relative displacements at joints. Data can instead be extracted from full-field measurements [71–78], e.g. performed by Digital Image Correlation (DIC), photogrammetry and Time-of-Flight (ToF) techniques capable of providing adequate precision. The application of DIC to an experiment similar to that described in Ref. [61] allowed crack opening displacements to be measured with 0.1 mm tolerance [79].

Digital images and photogrammetry data can be collected by mounting optical sensors on drones, or Unmanned Aerial Vehicles (UAVs), that approach the target locations from different directions [80–92]. Several studies have investigated the accuracy and limitations of these methodologies. Both laboratory and on site applications have been considered, estimating the motion of the UAV based on information from background stationary features [88,89]. The effect on image quality of ambient actions, such as wind and its fluctuations, has been analyzed in Ref. [90]. UAV photogrammetry, used to reconstruct the geometry of dams [84–87], showed that the accuracy of the measurements depends on the optimal distribution of Ground Control Points (GCPs). In Ref. [84], the vertical contraction joints could not be observed on the horizontal sections of the 3-Dimensional (3D) scene. However, they could be identified in the Red-Green-Blue (RGB) information scale of the point cloud, being darker than the surrounding region. Three-dimensional DIC was also applied to images acquired with two cameras mounted on a UAV, achieving an absolute error smaller than 0.34 mm [91]. In Ref. [92], it was shown that rapid changes in illumination brightness and shadow on the target area can produce blurry images. However, the quality of the acquired data can often be enhanced by correction algorithms, possibly based on ML tools implemented in parallel units to improve computing efficiency [93–97].

This contribution aims to provide an overview of the structural health assessment of dams, highlighting the interplay between the different competences and methodologies involved in this complex topic. In particular, Section 2 focuses on the definition of suitable interpretation models and on the calibration of their main parameters based on historical data series, while Section 3 is devoted to the non-destructive characterization of dam properties by full-field measurements performed on site. Finally, closing remarks are reported in Section 4.

2. Structural health monitoring of existing dams

Periodic inspections are standard practice in the monitoring of existing dams [98,99]. Piezometers, thermometers, plumb line transducers and optical coordinometers are the main instruments used to quantify external actions and structural response. Collected data are processed in almost real time to promptly identify any abnormal behavior. For this purpose, the measurements are compared with models, which can be statistical, deterministic, or hybrid. The use and merits of these alternatives are compared in this section through the analysis of the results of some relevant applications developed by the Authors.

Fig. 1. (a) Partition of a two-dimensional space (T, WL) by recursive binary splitting; (b) perspective plot of the prediction surface; (c) partition tree.

2.1. Data-driven models

Traditionally, dam monitoring data are managed by the HST model. This approach approximates the structural response by the combination of pre-defined functions, with coefficients calibrated on the measurements. The contribution to the overall displacements generated by the water depth in the reservoir is usually defined by polynomials, while the effect of temperature and aging is accounted for by harmonic and logarithmic (or linear) functions, respectively [6–11].
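The structure of an HST-type fit can be illustrated with a minimal least-squares sketch. The polynomial degree, the number of harmonics, the time term, and the synthetic data below are assumptions chosen for illustration only; they are not the settings used in the present study.

```python
import numpy as np

def hst_design_matrix(h, t_years):
    """Columns: constant, h..h^4 (hydrostatic), annual and semi-annual
    harmonics (seasonal), t and log(1 + t) (irreversible, time-dependent)."""
    s = 2.0 * np.pi * t_years
    return np.column_stack([
        np.ones_like(h), h, h**2, h**3, h**4,
        np.sin(s), np.cos(s), np.sin(2 * s), np.cos(2 * s),
        t_years, np.log1p(t_years),
    ])

def fit_hst(h, t_years, u):
    """Calibrate the HST coefficients on measured displacements u."""
    X = hst_design_matrix(h, t_years)
    coef, *_ = np.linalg.lstsq(X, u, rcond=None)
    return coef

# Synthetic five-year daily record (hypothetical values, mm and years).
rng = np.random.default_rng(0)
t = np.arange(5 * 365) / 365.0
h = np.clip(0.6 + 0.3 * np.sin(2 * np.pi * t + 1.0)
            + 0.05 * rng.standard_normal(t.size), 0.0, 1.0)  # normalised level
u = 4.0 * h**2 + 3.0 * np.cos(2 * np.pi * t) + 0.2 * t \
    + 0.1 * rng.standard_normal(t.size)

coef = fit_hst(h, t, u)
X = hst_design_matrix(h, t)
rmse = float(np.sqrt(np.mean((u - X @ coef) ** 2)))
hydrostatic_part = X[:, 1:5] @ coef[1:5]   # contribution of the water level
seasonal_part = X[:, 5:9] @ coef[5:9]      # contribution of the season
```

Because the water level itself follows a seasonal cycle, the individual coefficients can be poorly conditioned even when the overall fit is good, which is one reason the separated contributions should be interpreted with care.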

Alternative ML approaches like NN and ELM [13–17] rely on more flexible functions, with weights determined from the available monitoring data. BRT do not rely on any interpolation function [23–27], yet they produce accurate predictions with good generalization capabilities. This methodology was therefore adopted in the present demonstrative study as a valid alternative to HST.

BRT combine regression trees, which are part of the decision tree methods, with boosting, a technique that sequentially builds and merges a series of models. Modern decision trees are described in detail in Refs. [23,24]. They subdivide the observations into a certain number of regions according to the values of the input variables, assuming that the system response can be considered constant within each subdomain. For example, Fig. 1(a) visualizes one possible partition of the domain defined by the variables WL (water level) and T (temperature). Fig. 1(b) displays the piecewise constant approximation of a displacement component that represents the structural response. The partitions are defined during the training phase based on a heuristic greedy approach, i.e. the BRT algorithm selects the best option available at the moment to identify the splitting variable and the splitting point that define the regions. The difference between the actual output values and the mean values associated with each region defines an error-index, which is minimized to define the node of the regression tree shown in Fig. 1(c). The recursive binary partitioning process is repeatedly applied to each new region until some stopping criterion is satisfied.
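The greedy choice of a single split can be sketched as follows. This is a simplified illustration of one node of one regression tree, with the summed squared error of the two region means taken as the error index; the function name and the synthetic data are assumptions, and boosting is not included.

```python
import numpy as np

def best_split(X, y):
    """Return (variable index, threshold, error) of the binary split that
    minimises the summed squared error around the two region means."""
    best = (None, None, np.inf)
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        # Candidate thresholds: midpoints between consecutive observed values.
        for thr in (values[:-1] + values[1:]) / 2.0:
            left = X[:, j] <= thr
            sse = ((y[left] - y[left].mean()) ** 2).sum() \
                + ((y[~left] - y[~left].mean()) ** 2).sum()
            if sse < best[2]:
                best = (j, float(thr), float(sse))
    return best

# Synthetic response that jumps when the normalised water level exceeds 0.5.
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(200, 2))          # columns: T, WL
y = np.where(X[:, 1] > 0.5, 10.0, 2.0) + 0.1 * rng.standard_normal(200)

var_index, threshold, sse = best_split(X, y)      # expected to select WL
```

Applying the same search recursively to each of the two resulting regions, until a stopping criterion is met, yields the partition tree.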

Typical input and output data are shown in Figs. 2 and 3. The measurements refer to the concrete arch dam recently proposed as a benchmark problem by the International Commission on Large Dams (ICOLD) [100].

The predictions shown in Fig. 3 are produced by BRT trained first on an initial monitoring period of 5 years [101]. The training is then extended from year to year as new data becomes available to obtain the next one-year predictions.

Fig. 4 visualizes the difference between measured and predicted values of the training and test sets. The latter starts from the sixth year. The red dashed lines indicate the warning thresholds. The limits are updated annually by assuming that the density function of the residuals of each year follows a normal distribution with mean value μ and standard deviation sd. The interval μ ± 2.5sd is considered in this application. Monitoring readings that fall within the predefined range are classified as regular. Otherwise, they represent anomalies.

In particular, for each of the three years before the one for which the limits have to be defined, the mean μi and standard deviation sdi are computed on the residuals between the predictions calculated when the year is part of the test-set and the measurements. Finally, the estimated μ and sd values are obtained as a weighted average of the three μi and sdi, to which weights are applied according to the reference year, equal to 1, 1/2 and 1/3 from the most to the least recent one respectively. This average is considered because the residuals of only one year could be biased if they correspond, for example, to extreme temperature conditions or water levels not previously considered in the training. Increasing the amount of training data leads to progressively more accurate predictions. The reduction in residuals, in turn, leads to narrower alert levels.

The alert system is verified by introducing outliers into the data and checking whether the disturbances can be detected. In this application, 20 % of the displacements relevant to the last (13th) year were randomly selected. The data were modified to simulate outliers by randomly adding or subtracting 8 mm from the original measurements. All anomalies were correctly identified by the system. In fact, they correspond to the residuals beyond the limits visible in Fig. 4. Among the remaining normal data, just two false outliers were identified in the 10th year. Overall, the method proves to be effective in identifying the disturbances although the origin of the anomalies often remains unclear unless only the recordings of a specific device are affected. This problem can be solved by supplementing data-driven investigations with physics-based interpretation models. Their definition and calibration based on monitoring data is introduced next.
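The threshold update and the verification with artificial outliers described above can be sketched as follows. The residuals are synthetic, and the weighted average is assumed to be normalised by the sum of the weights, a detail not stated explicitly in the text.

```python
import numpy as np

def warning_limits(residuals_by_year, k=2.5, weights=(1.0, 0.5, 1.0 / 3.0)):
    """Limits mu -/+ k*sd from the residuals of the three previous years,
    listed from the most recent to the least recent."""
    w = np.asarray(weights)
    mu_i = np.array([np.mean(r) for r in residuals_by_year])
    sd_i = np.array([np.std(r, ddof=1) for r in residuals_by_year])
    mu = float(np.sum(w * mu_i) / np.sum(w))   # assumed normalisation
    sd = float(np.sum(w * sd_i) / np.sum(w))
    return mu - k * sd, mu + k * sd

rng = np.random.default_rng(1)
# Hypothetical residuals (mm) of the three previous test years.
past = [rng.normal(0.0, s, 365) for s in (1.0, 1.2, 1.5)]
lower, upper = warning_limits(past)

# New year: perturb 20 % of the readings by +/- 8 mm and check detection.
new_res = rng.normal(0.0, 1.0, 365)
idx = rng.choice(365, size=73, replace=False)
new_res[idx] += rng.choice([-8.0, 8.0], size=73)

flagged = (new_res < lower) | (new_res > upper)
detected_all = bool(flagged[idx].all())
false_alarms = int(flagged.sum() - flagged[idx].sum())
```

With a factor of 2.5 and normally distributed residuals, a small number of false alarms per year is to be expected even in the absence of anomalies.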

Fig. 2. Air temperatures (acquired by 2 sensors) and water level of the concrete arch dam proposed as ICOLD benchmark problem [100]: daily measurements.

Fig. 3. Measured and predicted displacement near the crest of the concrete arch dam proposed as the ICOLD benchmark problem [100].

Fig. 4. Difference between measured and predicted values in Fig. 3, and the corresponding warning levels (red lines) updated year by year. (For interpretation of the references to color in this figure legend, the reader is referred to the Web version of this article.)

2.2. Physics-based predictive models

Physics-based models of the structure are key tools to reliably interpret the measurements and identify the source of any anomalies. To be effective, the models must be accurately calibrated to reproduce the real system response. These predictive tools can then be used to simulate the most likely critical scenarios and produce synthetic pseudo-experimental data to improve the surveillance procedures.

The FE approach is mainly used in the context of dam engineering. This methodology is also applied to the present case study, which refers to a real arch-gravity dam, schematized in Fig. 5. Notably, the 3D discretization includes a large portion of the foundation in order to correctly reproduce the system response to external actions. The geometry of the dam body is based on the original design drawings, while the rock portion is approximated starting from the available topographic data.

The mechanical and thermal properties of concrete and rock are initially defined on the basis of geomechanical investigations and laboratory tests carried out on specimens produced on the construction site.

However, a range of variation needs to be considered due to initial uncertainties and potential changes over time. Therefore, parameter values may be optimized considering the data recorded during the lifetime of the structure, derived from seasonal variations of the external actions.

Daily time series of the reservoir level and air and water temperatures are available. These data are used as input to the FE analyses. The thermal and mechanical problems defined on the discretized domain are then solved separately, following the operational procedures proposed in Ref. [41]. However, unlike the simplifying assumptions introduced therein, actual time series data were used in the present study rather than approximated functions.

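The thermal problem is approached by a transient analysis carried out over the entire monitoring period, solving the discretized (in space and time) version of the heat diffusion equation:

$$\rho \, c \, \frac{\partial T}{\partial t} = k \left( \frac{\partial^2 T}{\partial x^2} + \frac{\partial^2 T}{\partial y^2} + \frac{\partial^2 T}{\partial z^2} \right) \tag{1}$$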

In relation (1): T(x, y, z, t) represents the temperature field; t is time; x, y, z are the spatial coordinates; k (thermal conductivity), ρ (density) and c (specific heat) are material parameters, usually assumed constant.

Temperature values are imposed on both dam faces, with different values for the wet and dry areas. If surface thermometers are installed, the recorded temperatures are interpolated linearly and assigned to the relevant mesh nodes. Otherwise, as in the present case, the average daily air temperature is imposed to the dry faces while a single value is assigned to the wetted boundary, equal to the water temperature recorded at a certain depth (e.g., 5 m). The temperature distribution inside the dam body at a given time is represented in Fig. 6. The computational results presented here are obtained by a widely used commercial code [102].

Transient results can be strongly influenced by the initial conditions. Therefore, a preliminary analysis is performed for an initial period of 5–6 years until a steady state is achieved. In this phase, the daily air and water temperatures correspond to the annual regression of the average temperature recorded on each day of the year, obtained from the entire historical series. The water level that defines the wetted surface (to which water temperatures are applied as boundary conditions) is also calculated from the annual regression of the data series at the basin level. Subsequently, the daily average of the measured air and water temperatures is assumed as input data. When possible, the temporal series are validated by the comparison with the temperature values recorded by thermometers placed on the structure.
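The role of the preliminary analysis can be illustrated with a one-dimensional sketch of heat conduction through the dam thickness, integrated with a backward Euler scheme. The material values, wall thickness, boundary temperatures, and tolerance are assumptions chosen for illustration; the actual study uses a 3D FE model and regressed temperature series.

```python
import numpy as np

def step_implicit(T, dt, dx, diffusivity, T_up, T_down):
    """One backward-Euler step of dT/dt = a * d2T/dx2 with imposed
    temperatures on the upstream (first) and downstream (last) nodes."""
    n = T.size
    r = diffusivity * dt / dx**2
    A = np.zeros((n, n))
    b = T.copy()
    A[0, 0] = A[-1, -1] = 1.0
    b[0], b[-1] = T_up, T_down
    for i in range(1, n - 1):
        A[i, i - 1] = A[i, i + 1] = -r
        A[i, i] = 1.0 + 2.0 * r
    return np.linalg.solve(A, b)

def spin_up(n_nodes=21, thickness=10.0, max_years=10, tol=0.01):
    """Repeat the same annual cycle until the year-end profile stabilises."""
    k, rho, c = 2.0, 2400.0, 900.0       # assumed W/(m K), kg/m3, J/(kg K)
    a = k / (rho * c)                     # thermal diffusivity, m2/s
    dx = thickness / (n_nodes - 1)
    dt = 86400.0                          # one day
    T = np.full(n_nodes, 10.0)            # arbitrary initial condition
    previous = T.copy()
    change = np.inf
    for year in range(1, max_years + 1):
        for day in range(365):
            T_air = 10.0 + 10.0 * np.sin(2.0 * np.pi * day / 365.0)
            T = step_implicit(T, dt, dx, a, T_up=6.0, T_down=T_air)
        change = float(np.max(np.abs(T - previous)))
        if change < tol:
            return T, year, change
        previous = T.copy()
    return T, max_years, change

T_profile, years_needed, last_change = spin_up()
```

The number of cycles needed grows with the square of the thickness, so thicker sections require a longer preliminary analysis than this thin illustrative wall.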

Fig. 5. Finite element discretization of an existing dam and its foundation.

Fig. 6. Temperature distribution within the dam body resulting from thermal analysis in wintertime: middle cross section.

Fig. 7. Deformed configuration of the analyzed dam under external action; displacement amplification factor 3000. The color scale refers to the upstream-downstream displacement component. (For interpretation of the references to color in this figure legend, the reader is referred to the Web version of this article.)

Fig. 8. Schematic location of interfacial gauges.

Temperature variations ΔT induce thermal strains εTii (i = x, y, z) in the dam body:

$$\varepsilon^{T}_{ii} = \alpha \, \Delta T, \qquad i = x, y, z \tag{2}$$

In relation (2), α represents the thermal expansion coefficient, which is a material constant. Thermal strains superimpose on the deformation produced by the mechanical (gravity and hydrostatic) loads, depending on the constitutive law of the bulk materials. Concrete is usually assumed isotropic, with linear stress-strain relationships characterized by the longitudinal elastic modulus Ec and the lateral contraction ratio νc. Rock is generally anisotropic, but in most analyses its mechanical contribution can simply be related to the mean parameters Er and νr. These moduli can vary from place to place due to the local morphology of soils, or to degradation phenomena.
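As an order-of-magnitude illustration, assuming a thermal expansion coefficient of $10^{-5}\,{}^{\circ}\mathrm{C}^{-1}$ (a typical value for concrete, not a calibrated parameter of the present case study) and a seasonal temperature variation of 15 °C, the free thermal strain and the corresponding elongation of an unrestrained 10 m long block would be:

$$\varepsilon^{T} = \alpha \, \Delta T = 10^{-5} \times 15 = 1.5 \times 10^{-4}, \qquad \Delta L = \varepsilon^{T} L = 1.5 \times 10^{-4} \times 10\ \mathrm{m} = 1.5\ \mathrm{mm}$$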

The constitutive parameters define the entries of the so-called stiffness matrix of the system, K, and the discretized version of the equilibrium equations:

$$\mathbf{K} \, \mathbf{u} = \mathbf{R} \tag{3}$$

where u represents the vector that collects the displacements at the discretization nodes and R is the vector of equivalent nodal forces, counterpart of the self-weight of the structure, the hydrostatic pressure, and the thermal effects.

Monolithic dams usually exhibit a reversible response under normal operating conditions. Therefore, all elements of the matrix K are constant, and the equation system (3) is linear. However, many dams include joints placed between the construction blocks and between the structure and its foundation. This also applies to the present case study. Usually, joints are only grouted after concrete setting. As a result, the tensile load-bearing capacity is almost null at these interfaces, which are characterized by non-linear frictional contact. In this case, the stiffness matrix K needs to be continuously updated with the opening/closure of the joints, and the equation system (3) is solved iteratively while material interpenetration is prevented by a penalty approach.
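The iterative update of K can be illustrated on a toy system with two blocks, one degree of freedom each, separated by a joint that transmits compression only. The stiffness values, the penalty parameter, and the active-set loop are assumptions made for this sketch; friction is omitted, and commercial codes use more elaborate contact algorithms.

```python
import numpy as np

def solve_joint(R, k1=1.0e9, k2=2.0e9, k_pen=1.0e12, max_iter=20):
    """Solve K u = R for two blocks whose joint has no tensile capacity.
    The joint opening is u[1] - u[0]; a negative value means penetration,
    which is resisted by a stiff penalty spring."""
    closed = True                                   # initial guess: contact
    for _ in range(max_iter):
        K = np.diag([k1, k2]).astype(float)
        if closed:
            K += k_pen * np.array([[1.0, -1.0], [-1.0, 1.0]])
        u = np.linalg.solve(K, R)
        opening = u[1] - u[0]
        # A closed joint must carry compression (opening <= 0);
        # an open joint must show no penetration (opening >= 0).
        new_closed = opening <= 0.0 if closed else opening < 0.0
        if new_closed == closed:
            return u, closed
        closed = new_closed                         # update K and repeat
    raise RuntimeError("joint status did not converge")

# Load pushing block 1 against block 2: the joint stays closed.
u_push, closed_push = solve_joint(np.array([1.0e6, 0.0]))
# Load pulling block 1 away: the joint opens and block 2 is unloaded.
u_pull, closed_pull = solve_joint(np.array([-1.0e6, 0.0]))
```

The small residual penetration in the closed state decreases as the penalty stiffness increases, at the price of a worse conditioned matrix.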

Relative movements between the blocks of the dam analyzed in this work are for instance emphasized in Fig. 7 by a large amplification factor applied to the displacements resulting at one time-step during the numerical analysis.

In reality, relative displacements are measured on site by interfacial gauges, which are distributed as represented in Fig. 8. The G1 and G2 recordings are compared in Fig. 9 with the corresponding simulation results, obtained by assuming the same friction coefficient μ for all joints. The agreement is fair for G1, considering the different sampling times of the measurements and computations, and poorer for G2.

Contraction joints present different configurations. Shear keys have significant resistance in the upstream-downstream direction, while other alternatives are smoother and weaker. As a result, the coefficient μ can vary over a relatively wide range. This parameter can also vary in space and time, due to different grouting degrees and degradation of the contact surfaces subjected to cyclic actions [56–63]. The relevant values can be determined and updated over time by the calibration procedure based on the monitoring data collected during the lifespan of the dam, as introduced in the next section.

2.3. Parameter calibration and sensitivity analysis

The parameters entering the FE model of the dam-foundation system can be identified through iterative optimization. A discrepancy function J is introduced to define the distance between a suitable selection of n measured displacements um, and the corresponding simulation results uk(p). The latter are functions of the sought properties (material and interface parameters and their distributions) collected by vector p. The best parameter set minimizes J(p), which often corresponds to:

$$J(\mathbf{p}) = \sum_{k=1}^{n} w_k \left[ u_{mk} - u_k(\mathbf{p}) \right]^2 \tag{4}$$

The minimization of J(p) is performed within the limits (physical, or suggested by experts in the field):

$$\mathbf{p}_{\min} \le \mathbf{p} \le \mathbf{p}_{\max}$$

and under the condition that the computed displacements uk (p) satisfy the equation system (3). In (4), the coefficients wk represent weights, or normalization factors imposed on the displacement components.
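A bounded identification loop of this kind can be sketched with a Gauss-Newton scheme and finite-difference derivatives. The analytical forward model below is a hypothetical stand-in for the FE analysis, a weighted least-squares form of J(p) is assumed, and the bounds are enforced by simple projection; none of these choices is claimed to reproduce the procedure used by the Authors.

```python
import numpy as np

def forward(p):
    """Hypothetical surrogate of the FE model: crest and base displacements
    (mm) as functions of the moduli Ec and Er (GPa)."""
    Ec, Er = p
    return np.array([600.0 / Ec + 200.0 / Er, 150.0 / Er])

def discrepancy(p, u_m, w):
    """Weighted least-squares distance between measurements and model."""
    return float(np.sum(w * (u_m - forward(p)) ** 2))

def identify(u_m, w, p0, p_min, p_max, n_iter=50, rel_step=1e-6):
    """Projected Gauss-Newton iterations with forward-difference Jacobian.
    Each iteration costs len(p) + 1 forward analyses."""
    p = np.clip(np.asarray(p0, dtype=float), p_min, p_max)
    sw = np.sqrt(w)
    for _ in range(n_iter):
        f0 = forward(p)
        jac = np.empty((f0.size, p.size))
        for j in range(p.size):
            dp = np.zeros_like(p)
            dp[j] = rel_step * max(abs(p[j]), 1.0)
            jac[:, j] = (forward(p + dp) - f0) / dp[j]
        step, *_ = np.linalg.lstsq(sw[:, None] * jac, sw * (u_m - f0),
                                   rcond=None)
        p_new = np.clip(p + step, p_min, p_max)     # enforce the limits
        converged = np.linalg.norm(p_new - p) < 1e-10 * np.linalg.norm(p)
        p = p_new
        if converged:
            break
    return p

p_true = np.array([25.0, 15.0])                     # used only to build data
u_m = forward(p_true)                               # noise-free pseudo-data
w = 1.0 / u_m**2                                    # normalisation weights
p_hat = identify(u_m, w, p0=[35.0, 10.0],
                 p_min=np.array([10.0, 5.0]), p_max=np.array([50.0, 40.0]))
J_final = discrepancy(p_hat, u_m, w)
```

With noisy measurements the minimum of J no longer vanishes, and the identified values then depend on the selection and weighting of the measurements discussed in the following paragraphs.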

An adequate selection of the measurements to be included in (4) can improve the robustness of the identification procedure and the reliability of its results, which are influenced by measurement noise, inaccuracies in the simulation model, and uncertainties of the physical system. In fact, the discretized version of a solid is usually stiffer than its continuous counterpart unless a very fine mesh is implemented, with a significant increase in calculation times and costs. Therefore, a balance is usually sought between accuracy and computational cost.

On the other hand, the detailed morphology of the rock beneath the dam foundation is often only partially known. In addition, the actual temperature distribution is not reconstructed accurately when there are few or no thermometers on the concrete surface and, therefore, the assumed boundary conditions correspond to the average daily air and water temperatures. Actually, the daily temperature history depends on the season, since the number of hours with a temperature close to the maximum increases in summer, while the temperature is mostly close to the minimum in winter. Moreover, solar radiation is not always negligible.

However, not all external actions and model parameters affect the measurable quantities to the same extent. Low sensitivity means that the parameter is difficult to identify accurately but, on the other hand, it also implies that even large errors in its value do not introduce significant discrepancies between the predicted and actual structural response.

Sensitivity analysis can be performed on all available models of the system under investigation. Relative importance indexes of the influence of environmental (predictive) variables on the measured quantities can be defined for the heuristic BRT approach (Section 2.1) according to Friedman’s proposal [103]. The index considers the relative presence of each predictor, selected as the best partition during the training phase, and the error reduction achieved correspondingly. The graphs in Fig. 10 show the different effects produced by the main ambient actions on the two dams considered in the present work as reference tests for the proposed methodologies.

Other methods, such as Linear or Logistic Regression, consider quantifications based on the model coefficients. A methodology applicable to any algorithm, introduced by Breiman [104] for Random Forest, computes the increase in the prediction error after the permutation of the predictor variable whose relative importance is to be estimated. A significant increase in error indicates an important predictor. In all cases, importance indexes can guide the variable selection and the possible reduction of the model complexity.
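The permutation-based index can be sketched as follows. A linear model fitted by least squares stands in for the trained predictor, the data are synthetic, and the error is evaluated on the training data for brevity (Breiman's original proposal relies on out-of-bag samples); all names are illustrative.

```python
import numpy as np

def permutation_importance(predict, X, y, rng, n_repeats=10):
    """Mean increase of the mean squared error after shuffling each
    predictor column in turn, which breaks its link with the response."""
    base = np.mean((y - predict(X)) ** 2)
    increase = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])
            increase[j] += np.mean((y - predict(Xp)) ** 2) - base
    return increase / n_repeats

rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(500, 3))     # columns: WL, T, D (normalised)
y = 5.0 * X[:, 0] + 1.0 * X[:, 1] + 0.1 * rng.standard_normal(500)

# Stand-in predictor: linear regression with intercept.
A = np.column_stack([np.ones(len(X)), X])
beta, *_ = np.linalg.lstsq(A, y, rcond=None)
predict = lambda Z: np.column_stack([np.ones(len(Z)), Z]) @ beta

importance = permutation_importance(predict, X, y, rng)
```

In this synthetic case the water level dominates, the temperature has a smaller effect, and the third predictor, which does not enter the response, receives an index close to zero.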

Sensitivities can be evaluated from the physics-based model as well. The deformation induced by temperature variations depends on the thermal expansion coefficient α, relation (2), while that generated by the hydrostatic load mainly reflects the longitudinal moduli Ec and Er. Numerical studies also indicate that displacements at the dam crest commonly show greater sensitivity to changes in Ec, while displacements at the base are equally dependent on Er.

The considerations listed above can be combined with the specific features of the HST model (Section 2.1) to define a sequential approach to parameter identification with improved effectiveness and reduced computational effort. The proposed methodology is here applied to the model of the real arch-gravity dam schematized in Fig. 5.

The HST approach makes it possible to distinguish the hydrostatic, thermal, and ageing contributions to the overall displacement of each monitored point. This decomposition allows a specific identification procedure to be designed that divides the unknown parameters into two groups, with significant computing savings. In fact, the time and cost required to iteratively minimize function (4) under conditions (3) increase exponentially with the size of the vector p since the gradients of the discrepancy function J are evaluated numerically, by finite difference schemes, in the FE context.

In the present proposal, the elastic moduli are estimated first by comparing the displacements due to a monotonic variation of the hydrostatic load in the FE model with the relative contribution extracted from the statistical model. A second inverse problem is then formulated and solved to estimate the thermal coefficient α. In this case, the seasonal contribution of the HST models is compared with the deformations produced in the dam body by temperature variations over a one-year period, evaluated by FE analysis.

Aging and other degradation phenomena may be reflected in the variation of parameter values over time, i.e. p = p(t) [31–33]. If time dependency is not explicitly taken into account in the formulation of the optimization problems, recalibration performed at certain time intervals can be part of the procedures implemented for the dam surveillance and for the definition of a digital twin of the structure through model updating.

In the computations mentioned so far, the friction coefficient is usually kept constant and defined based on previous experience. However, this assumption may produce some inconsistencies, such as those shown in Fig. 9. This and other issues can be addressed by the synergetic combination of suitably defined modelling and experimental techniques [105]. In particular, when the measurements required to perform inverse analysis in a robust way are not available, or not provided in sufficient numbers, additional information can be obtained from the full-field remote procedures introduced in the next section. In turn, an accurately calibrated simulation model facilitates the interpretation of the results of the full-scale non-destructive testing produced by the seasonal variation of the external actions on the dam [73].

Fig. 10. Relative importance indexes of the main input variables for two different dams: a) the ICOLD benchmark problem [100]; b) the real one schematized in Fig. 5. The considered predictors correspond to: water level (WL), temperature (T), date (day: D; month: M; year: Y).

3. Full-scale non-destructive in-situ testing of dams

The geometrical configuration of dams follows seasonal changes in temperature and water levels. The relevant information can be exploited to determine the main mechanical characteristics of the structure and their evolution over time. Large sensor networks installed in newly constructed structures enable detailed monitoring of the facility response [30]. However, the number of measuring devices may be limited in long-existing dams, and their placement may not be optimal for the reliable calibration of simulation models, and for anomaly detection.

Additional information can then be acquired, occasionally or systematically, by non-contact full-field measurement techniques [71–77], possibly by mounting the appropriate equipment on drones [81–92], Fig. 11, to overcome the problem of difficult access to some structures or dam sectors.

Detailed temperature distributions on the dam surfaces can also be obtained through infrared thermography, using ML algorithms to analyze the color images acquired by UAVs. This methodology is currently in use in various application fields and can provide an accuracy of 1 °C [106–108].

Vision-based techniques can also measure 3D displacement components on the dam surface and joints. A digital image is a two-dimensional projection of the 3D scene observed by the camera lens. 3D information is retrieved using more than one camera, in stereo arrangement. Alternatively, multiple images of the Region of Interest (RoI) are acquired using one camera and processed with photogrammetry. ToF sensors can also be a good option whenever 3D reconstruction of dam portions is needed, e.g. at the joints. This technology is based on infrared radiation rather than image acquisition. In principle, other 3D scanning techniques such as fringe projection or active stereoscopy could be applied [109,110], but the environmental conditions typical of large dams are usually not suitable for these approaches.

The main characteristics of these alternative methodologies are illustrated in the following. Their merits and limitations are highlighted by the results of the preliminary validation studies carried out by the Authors.

Fig. 11. Vision equipment mounted on drone.

3.1. Digital Image Correlation

3D Digital Image Correlation (DIC) techniques combine information provided by two calibrated cameras at a relative position that remains fixed during image acquisition. Cameras can be mounted on drones as UAV fluctuations do not affect the measurements, thanks to the capability of the 3D DIC to correctly evaluate the target displacement field neglecting rigid motions (roto-translations) between the camera and the target.

A digital image is composed of an array of discrete pixels, and each pixel stores the brightness value of the corresponding point of the scene. In DIC analysis, a selected reference image corresponds to the initial configuration of the target object, with which subsequent images are compared. The RoI in the reference image is then divided into subsets. The location of each reference subset is tracked in the subsequent images, as illustrated schematically in Fig. 12.

The similarity between the reference subset and the target one in the deformed configuration is evaluated using a criterion based on cross-correlation or sum of squares of the differences of the pixel brightness distribution. The methodology is implemented in both open source tools [111,112] and licensed commercial software [113–115]. Notably, cross correlation is quite sensitive to changes in image resolution.
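The subset matching step can be illustrated with a minimal sketch, which is not the implementation of any of the cited tools. It assumes a zero-normalized cross-correlation (ZNCC) criterion and an exhaustive integer-pixel search; the function names `zncc` and `track_subset`, the subset size and the search radius are hypothetical choices made for illustration only. Actual DIC codes add sub-pixel interpolation and subset shape functions.

```python
import numpy as np

def zncc(a, b):
    """Zero-normalized cross-correlation between two equally sized subsets."""
    a = a.astype(float) - a.mean()
    b = b.astype(float) - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def track_subset(ref, cur, top, left, size, search):
    """Integer-pixel search of the shift (rows, cols) that maximizes ZNCC."""
    subset = ref[top:top + size, left:left + size]
    best_score, best_shift = -np.inf, (0, 0)
    for dv in range(-search, search + 1):
        for du in range(-search, search + 1):
            r, c = top + dv, left + du
            # Skip candidate positions falling outside the current image.
            if r < 0 or c < 0 or r + size > cur.shape[0] or c + size > cur.shape[1]:
                continue
            score = zncc(subset, cur[r:r + size, c:c + size])
            if score > best_score:
                best_score, best_shift = score, (dv, du)
    return best_shift, best_score

# Synthetic speckle image and a copy rigidly shifted by 3 rows and -2 columns.
rng = np.random.default_rng(0)
reference = rng.random((64, 64))
deformed = np.roll(reference, shift=(3, -2), axis=(0, 1))

shift, score = track_subset(reference, deformed, top=20, left=20, size=15, search=5)
print(shift, round(score, 6))
```

Repeating the search for every subset of the RoI yields the discrete displacement field sketched in Fig. 12.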

DIC procedures are made more reliable by characterizing the monitored surface with a high-contrast random isotropic speckle [116]. In dam monitoring, a natural speckle may form due to porosity and impurities in the concrete surface. However, the uncertainty of the results is more than halved by the use of fit-for-purpose speckles [117]. The widespread use of DIC over the past three decades has seen the application of speckle patterns to regions ranging in size from millimeters to several meters [118].

Fig. 12. Illustration of subset tracking between reference and deformed configurations.

The basic assumption of DIC is that the pattern of the target surface does not change significantly during deformation. In this regard, variable lighting with weather conditions can represent an issue as rapid changes in brightness and shadow over the target area can produce blurry images. Most correlation criteria implemented in DIC procedures are robust to both uniform and scaled changes in illumination [76]. This has been proven experimentally in the tests performed in Refs. [119,120], where images were numerically modified to simulate the effects of varying brightness. In contrast, uneven change in illumination produces substantial errors in DIC results. This limitation was found in Ref. [91] as quick variations in shadows and brightness were observed during bridge monitoring. The issue of non-uniform lighting in DIC applications can be overcome by image correction techniques [121,122]. In fact, it can be assumed that the image is formed of a high-frequency pattern on a low-frequency background, which is affected by the non-uniform lighting. Image correction is based on eliminating the background from the image originally acquired.
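The different effect of uniform and uneven illumination changes can be reproduced with a short numerical sketch. It assumes a ZNCC criterion, a synthetic random pattern, a linear brightness gradient as the uneven lighting, and a simple moving-average (box) filter as the estimate of the low-frequency background. The filter and the names `remove_background`, `window` and `shadow` are illustrative assumptions, not the correction techniques of Refs. [121,122].

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def zncc(a, b):
    """Zero-normalized cross-correlation of two images of equal size."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / np.sqrt((a * a).sum() * (b * b).sum()))

def remove_background(image, window=9):
    """Subtract a moving-average estimate of the low-frequency background."""
    half = window // 2
    padded = np.pad(image, half, mode="edge")
    background = sliding_window_view(padded, (window, window)).mean(axis=(2, 3))
    return image - background

rng = np.random.default_rng(1)
pattern = rng.random((40, 40))                      # high-frequency texture

uniform_change = 2.5 * pattern + 10.0               # scaled and offset brightness
shadow = np.linspace(0.0, 2.0, 40)[np.newaxis, :]   # brightness gradient across columns
uneven_change = pattern + shadow

score_uniform = zncc(pattern, uniform_change)
score_uneven = zncc(pattern, uneven_change)
score_corrected = zncc(remove_background(pattern), remove_background(uneven_change))
print(score_uniform, score_uneven, score_corrected)
```

In this synthetic case the correlation is unaffected by the scaled and offset brightness, drops markedly under the gradient, and is largely restored once the estimated background is subtracted from both images.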

Data quality can be enhanced by correction algorithms, possibly based on ML tools implemented in parallel units to improve computing efficiency [93–97]. In particular, pattern matching procedures allow the recovery of accurate quantitative information on displacement discontinuities at joints [123].

UAV-based 3D DIC can achieve sub-millimeter absolute errors when speckle patterns are applied [91]. The present challenge is to exploit the natural surface texture for DIC application to dams [124].

3.2. Photogrammetry

Photogrammetry uses a series of images to obtain a 3D reconstruction of the observation scene through the integration of point clouds. Usually, images of the monitored structure are acquired from several locations and at different angles. The procedure includes feature extraction, tracking, matching, and geometric verification of different images of the RoI. The next steps consist of incrementally registering new images, triangulating scene points, filtering outliers, and refining the reconstruction using bundle adjustment, i.e. the simultaneous refinement of the 3D coordinates describing the scene geometry. These procedures can potentially be addressed by ML approaches and provide reliable high-quality results [125].

Several software packages based on the Structure-from-Motion (SfM) algorithm allow a 3D reconstruction to be obtained from photographs taken with conventional cameras. SfM automatically reconstructs the geometry of the scene, together with the positions and orientations from which the photographs were taken, without the need to establish a point network with known 3D coordinates [86]. Therefore, SfM can work with images taken from random positions, which is a key advantage in the case of UAV-based acquisition [126]. Moreover, photographs obtained with variable camera orientation improve accuracy and reduce systematic errors [92,127]. However, the flight path of the UAV can influence the quality of the results. In this regard, a circular path has been found to work better than a rectangular one for 3D mapping [128]. In all cases, the accuracy of photogrammetric measurements can be increased by contrast enhancement techniques [129].

One relevant issue of photogrammetry is that the 3D reconstruction is obtained up to a scaling factor, which can be retrieved from the known distance between at least two points positioned in the scanned area, usually referred to as Ground Control Points (GCPs). Any error in the GCP coordinates affects the scaling precision of the 3D model.
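As a minimal sketch of the scaling step, the factor can be computed as the ratio between the known distance of two control points and the distance of the same points in the unscaled reconstruction. The function names and the numerical values below are hypothetical and serve only to illustrate the arithmetic.

```python
import math

def scale_factor(p_model, q_model, known_distance):
    """Ratio between the surveyed distance and the distance in model units."""
    model_distance = math.dist(p_model, q_model)
    if model_distance == 0.0:
        raise ValueError("control points coincide in the reconstruction")
    return known_distance / model_distance

def rescale(points, s):
    """Apply the scale factor to every point of the reconstruction."""
    return [tuple(s * c for c in p) for p in points]

# Two control points in arbitrary model units (hypothetical values).
gcp_a = (0.0, 0.0, 0.0)
gcp_b = (3.0, 4.0, 0.0)          # 5 model units from gcp_a
surveyed_distance = 12.5         # metres, assumed known from the survey

s = scale_factor(gcp_a, gcp_b, surveyed_distance)
scaled = rescale([gcp_a, gcp_b], s)

# A 0.05 m error on the surveyed distance changes the scale by the same relative amount.
s_biased = scale_factor(gcp_a, gcp_b, surveyed_distance + 0.05)
relative_scale_error = (s_biased - s) / s
print(s, scaled[1], relative_scale_error)
```

Since the scale multiplies every coordinate, a relative error on the control distance is transferred unchanged to all lengths measured on the 3D model.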

GCPs are often located in the global reference system by means of GPS devices [84]. However, the use of GPS to estimate the GCP locations normally requires that a human operator reach the GCPs. Therefore, the control points are usually located far from the dam surface.

3.3. Time of Flight cameras

Time of Flight cameras are promising tools for the structural health monitoring of existing dams. This technology measures the time taken by infrared radiation, emitted by the scanner, to reach the target and be reflected back to the sensor [81]. This operating principle allows the 3D shape of the target to be recovered with a single camera, without the need for multiple image acquisitions.
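The operating principle can be summarized by the relation d = c t / 2, where t is the round-trip time and c the speed of light. The sketch below is an idealization of the direct time measurement described above, with hypothetical function names; it also shows the timing resolution that a given range resolution would require.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in vacuum

def tof_distance(round_trip_time):
    """Sensor-to-target distance from the round-trip time of the signal."""
    return SPEED_OF_LIGHT * round_trip_time / 2.0

def round_trip_time(distance):
    """Round-trip time corresponding to a given sensor-to-target distance."""
    return 2.0 * distance / SPEED_OF_LIGHT

distance_m = tof_distance(20e-9)          # 20 ns round trip
time_for_1_mm = round_trip_time(0.001)    # timing step equivalent to 1 mm
print(distance_m, time_for_1_mm)
```

A round trip of 20 ns corresponds to about 3 m, while a range resolution of 1 mm corresponds to a timing resolution of a few picoseconds.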

A typical order of magnitude of the uncertainty of ToF cameras mounted on drones is a few millimeters [81]. Therefore, ToF sensors can allow measuring relative displacements at joints due to seasonal changes with adequate accuracy, while monitoring small cracks is difficult.

One of the main specific limitations to ToF use is the sunlight disturbance, which can be alleviated by ToF sensors working at 900 nm wavelength, in the spectrum region where sunlight is strongly attenuated by the filtering effect of the atmosphere. UAV-based ToF measurements can also be affected by uncertainties due to vibrations associated with the mobile and unstable position of the 3D sensor, which can be reduced by specifically developed mitigation strategies [130].

3.4. Non-contact measurements of dam surface

The non-contact measurement techniques considered in this work cover a variety of applications. In all cases, the large amount of collected data can be handled by ML algorithms, while coupling with FE models may require specific post-processing of the measurements. The large extension of dam surfaces also poses some specific problems, illustrated in the following.

3.4.1. Relative displacements at joints

The output of 3D DIC analysis is a displacement field (in-plane and out-of-plane components) recovered from data discretely calculated for each subset (Fig. 12). Strains can be evaluated by finite differences with the application of a smoothing filter. In the presence of discontinuities at joints, or produced by cracks, the value of the computed strains has no physical meaning and is sensitive to the size of the smoothing window. Still, the strain maps can help detect and visualize the position and extension of the discontinuities, as shown for example in Fig. 13 [131,132]. The images refer to a laboratory application.
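The dependence of the apparent strain on the smoothing window can be verified on a one-dimensional sketch. It assumes a displacement profile with a 0.5 mm jump sampled every 1 mm, a moving-average smoothing filter and central finite differences; all names and values are hypothetical, and commercial DIC codes may use different filters.

```python
import numpy as np

def smoothed_strain(u, spacing, window):
    """Moving-average smoothing (odd window) followed by finite differences."""
    kernel = np.ones(window) / window
    padded = np.pad(u, window // 2, mode="edge")
    u_smooth = np.convolve(padded, kernel, mode="valid")
    return np.gradient(u_smooth, spacing)

spacing = 1.0                                   # mm between data points
x = np.arange(-20, 21) * spacing                # mm, joint located at x = 0
jump = 0.5                                      # mm, displacement discontinuity
u = np.where(x >= 0.0, jump, 0.0)               # rigid blocks on both sides

peak_w3 = smoothed_strain(u, spacing, window=3).max()
peak_w9 = smoothed_strain(u, spacing, window=9).max()
print(peak_w3, peak_w9)
```

Both blocks are undeformed, yet a strain peak appears at the joint, and its amplitude scales roughly with the inverse of the window size. The peak therefore localizes the discontinuity but does not quantify any material strain.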

The distribution of opening and sliding displacements at the joints can be calculated from the displacement values at the left and right sides of the discontinuity surfaces, Fig. 13(b). Image stitching allows large domains to be considered.
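A possible post-processing of the two-sided displacement values is sketched below. It assumes that the joint normal is known and points from the left to the right block, so that a positive normal component of the displacement jump denotes opening; the function name and the numerical values are hypothetical.

```python
import numpy as np

def joint_jump(u_left, u_right, normal):
    """Split the displacement jump into opening (scalar) and sliding (vector)."""
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    jump = np.asarray(u_right, dtype=float) - np.asarray(u_left, dtype=float)
    opening = float(jump @ n)            # component normal to the joint
    sliding = jump - opening * n         # component lying in the joint plane
    return opening, sliding

# Displacements (mm) measured on the two sides of a joint with normal along x.
u_left = (0.1, 0.0, 0.0)
u_right = (0.9, 0.3, 0.4)
opening, sliding = joint_jump(u_left, u_right, normal=(1.0, 0.0, 0.0))
sliding_magnitude = float(np.linalg.norm(sliding))
print(opening, sliding, sliding_magnitude)
```

Applying the same decomposition at each pair of facing points gives the distribution of opening and sliding along the joint.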

Photogrammetry and ToF sensors return a 3D point cloud (3D model) of each scan of the monitored region. A preliminary evaluation of their performance for the present purposes has been performed using a tiny drone (DJI Tello EDU drone) fitted with a 5 MP camera and a Microsoft Azure Kinect device equipped with a 12 MP color camera and a 1 MP ToF camera. In the preliminary study the Azure Kinect was not mounted on the drone due to the insufficient payload capacity of the latter; however, it can be accommodated in larger drones, as done for example in Ref. [81].

One application concerning ToF involves the two concrete blocks shown in Fig. 14 in their reference and deformed configurations. Prior to comparing the point clouds, a registration procedure is performed to determine the rigid transformation that aligns the two clouds. These are generally referred to different coordinate systems, since they correspond to different loading/environmental situations (and most probably to different times) and the camera location is usually different for the two acquisitions.
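The core of the registration step can be sketched under the simplifying assumption that point correspondences between the two clouds are known. In that case the rigid transformation follows in closed form from a singular value decomposition (the Kabsch solution). Real scans have no known correspondences, so iterative schemes typically repeat this step after matching nearest points; the function name `rigid_fit` and the synthetic data are illustrative only.

```python
import numpy as np

def rigid_fit(source, target):
    """Least-squares rotation R and translation t such that target ~ R @ source + t."""
    c_src, c_tgt = source.mean(axis=0), target.mean(axis=0)
    h = (source - c_src).T @ (target - c_tgt)       # 3x3 cross-covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))          # guard against reflections
    rotation = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    translation = c_tgt - rotation @ c_src
    return rotation, translation

# Synthetic cloud and a copy seen from a different (hypothetical) camera pose.
rng = np.random.default_rng(2)
cloud_ref = rng.random((50, 3))
angle = np.deg2rad(10.0)
r_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([0.5, -0.2, 0.1])
cloud_new = cloud_ref @ r_true.T + t_true

r_est, t_est = rigid_fit(cloud_ref, cloud_new)
residual = np.abs(cloud_ref @ r_est.T + t_est - cloud_new).max()
print(residual)
```

In a monitoring application the fit would be restricted to regions assumed not to move, so that the residuals after alignment represent the displacements of interest.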

The presence of noise or large displacements in real applications may pose challenges in the registration process [133,134]. Furthermore, large data sets usually imply huge computational effort. Downsampling is often applied to reduce the point cloud size and mitigate the problem.
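One common way of downsampling a point cloud is a voxel grid filter, in which all points falling in the same cubic cell are replaced by their centroid. The sketch below is a plain implementation of this idea with a hypothetical function name and cell size; it is not necessarily the procedure adopted in the cited works.

```python
import math
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Replace the points contained in each cubic cell by their centroid."""
    cells = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)   # integer cell index
        cells[key].append(p)
    return [tuple(sum(coords) / len(group) for coords in zip(*group))
            for group in cells.values()]

# Three points (m): the first two share a 1 m cell, the third lies in another one.
cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.1, 0.1)]
reduced = voxel_downsample(cloud, voxel_size=1.0)
print(reduced)
```

The cell size sets the trade-off between computing effort and spatial resolution, and should remain smaller than the features to be resolved, e.g. the joint width.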

3.4.2. Spatial resolution and uncertainty

Spatial resolution of most of the state-of-the-art ToF cameras is poorer than the resolution of 2D digital cameras. The resolution of most ToF sensors is below 1 MP [135,136], while common 2D digital cameras can easily reach tens of MP. Testing the accuracy of ToF cameras from different producers [136] showed that an absolute error smaller than 2 mm could be achieved with a measuring range between 0.5 and 3 m.

Fig. 13. (a) Image of a monitored region with cracks; (b) displacement field (horizontal components) recovered by DIC; (c) map of the horizontal strain components.

Fig. 14. Two concrete blocks in reference (a, b, c) and deformed (d, e, f) configuration: (a, d) photographs of the objects; (b, e) top view of the textured (colored) point cloud; (c, f) 3D view of the textured point cloud.

Similarly, lab experiments showed that the accuracy of the ToF sensor employed in our preliminary investigations varied from 1.1 mm to 12.7 mm for a measuring range of 1 m–5 m [137]. In any case, ToF cameras must be close to the dam surface during scanning due to the limited range of the infrared projector and to sunlight disturbances. A close-up acquisition also benefits photogrammetry, which can therefore rely on the visibility of natural features such as dust, impurities, and scratches.

The spatial resolution of 3D DIC measurements strongly depends on camera characteristics, camera-to-target distance, and processing parameters (most importantly, subset size and overlap between adjacent subsets). Generally, DIC relies on surface texture. Generating artificial speckles on dam surfaces is time-consuming and expensive. Therefore, the use of natural features is highly preferred, limiting the acquisition to close-up images as suggested for all three techniques considered here.

The lower limit of the displacement discontinuities measured by DIC in the lab, with a speckle pattern applied to the monitored surface shown in Fig. 13, was 0.02 mm. This value can hardly be reached in dam applications, but measuring relative displacements of the order of 1 mm can be feasible.

3.4.3. Texture information and processing time

Texture information can be used to segment potentially damaged regions and allows data concerning consecutive regions of the large dam surfaces to be combined. This property is natively returned by photogrammetry, as opposed to the pure 3D coordinates of point clouds returned by ToF cameras. However, the combination of calibrated ToF and RGB cameras can overcome this limitation.

DIC, although not producing textured 3D point clouds, allows every 3D point to be associated with the gray level of the images used to estimate it. Therefore, all the considered techniques provide texture information, although photogrammetry produces more realistic results.

An advantage of ToF sensors with respect to photogrammetry and DIC is that they provide data in real time, while the other two techniques typically require relatively long processing to produce a high-resolution spatial model of the area of interest [138]. In any case, one factor currently hindering the implementation of UAV measurements in large applications is the payload limit [82], which imposes restrictions on flight duration and may lead to the need for multiple flights to complete the measurements.

4. Summary and conclusion

This contribution provides an overview of different experimental, computational and data management methodologies that can support the structural health assessment of existing dams. Large amounts of data about ambient conditions and structural response collected by sensor networks are recorded and analyzed, usually by statistical models or machine learning tools capable of defining warning thresholds and highlighting anomalous values. Both approaches were considered in the present work, showing that BRT can exhibit superior performance in predicting the structural response under normal operating conditions. In contrast, the HST methodology can significantly reduce the computational burden associated with the identification of constitutive and interfacial parameters that enter into simulation models grounded on the physics of the real facility, which are generally defined in the finite element framework. A specially designed inverse analysis procedure based on the separation of mechanical and thermal properties of the dam body and foundation is proposed.

The digital twins of existing dams, properly calibrated and validated, and continuously updated with the flow of monitoring data, allow the evaluation of the effects of extreme environmental conditions, damage or collapse mechanisms that have never actually occurred. The current status of the structure can thus be assessed with greater reliability, anticipating any likely degradation processes and identifying the type and severity of any possible damage.

In long-existing dams, the number of installed measuring devices may be limited and their positioning may not be optimal for reliable model calibration and anomaly detection. Under these conditions, complementary monitoring solutions based on emerging full-field measurement techniques performed by mounting various equipment on drones can be implemented.

The type and configuration of UAVs to be used strongly depend on the adopted measurement technique, which in turn is dictated by the accuracy to be achieved and by technological considerations. Standard commercial drones, equipped with a single 2D camera, can collect images for photogrammetric processing. In the case of 3D DIC or ToF sensors, custom equipment is usually required. Using DIC also imposes restrictions on the distance between camera and object, while photogrammetry can process images captured from random positions, thus offering greater flexibility in the measurement setup. In all cases, machine learning tools can reduce noise produced by vibrations and suboptimal environmental conditions to provide high-quality results. The above-mentioned methodologies can be used to evaluate the relative displacements that develop at joints, for model calibration purposes. In fact, joint characteristics are affected by large uncertainties in aged dams. In this context, surface texture can be exploited to inspect large areas by combining data regarding partially overlapping consecutive regions. Our preliminary validation studies have highlighted the different precision obtainable from alternative remote measurement systems. However, continued technological advances are expected to improve the results of all these techniques in the near future.

Overall, the simulation model of the structure, the installed sensor network, the data processing algorithms, an optimized plan of periodic complementary inspections and the digitalization of all available information define a complete and reliable surveillance system capable of automatically evaluating the health status of existing dams and diagnosing any malfunctioning.

CRediT authorship contribution statement

Gabriella Bolzon: Writing – review & editing, Writing – original draft, Supervision, Methodology, Investigation, Conceptualization. Antonella Frigerio: Writing – review & editing, Supervision, Investigation. Mohammad Hajjar: Writing – review & editing, Visualization, Investigation. Caterina Nogara: Writing – review & editing, Visualization, Investigation, Data curation. Emanuele Zappa: Writing – review & editing, Writing – original draft, Supervision, Methodology.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The authors gratefully thank their colleagues at Edison S.p.A. for supporting this research.